Misleading presentation of Biden gaffe likely boosted by a bot-like network

Hoaxlines Lab • Nov 20, 2021

An examination of users who retweeted two or more of the #RacistJoe-related tweets analyzed by Hoaxlines revealed traits associated with platform manipulation. That the misleading campaign trended on Twitter, drowning out the most relevant voices, such as that of the President of the Negro Leagues Baseball Museum, demonstrates how a small group could effectively silence opposition.

At least two users in the dataset appeared to target the username “POTUS.” Targeting the US President may not achieve the goals ordinarily associated with social media harassment, which are “to silence members of civil society, muddy their reputations, and stifle the reach of their messaging,” according to Mozilla Fellow Odanga Madung. Still, Hoaxlines documented the findings for potential research value. Twitter platform manipulation policies already address many problematic behaviors observed by Hoaxlines, but enforcement is inconsistent, leaving the United States vulnerable to domestic and foreign manipulation.

Key findings:

- Hoaxlines’ examination of accounts retweeting two or more #RacistJoe-related tweets revealed that many had traits associated with inauthentic accounts. An inauthentic account is an online entity (a social media account or a website) that misleads about who it is or what it aims to achieve. Inauthentic behavior and platform manipulation do not necessarily indicate that activity is automated.

- Users that retweeted more than one of the #RacistJoe-related tweets have some or all of the following: blank profiles, formulaic handles, recently created profiles, dormant accounts, no identifiable information, and a narrow range of interests not found with genuine accounts.

- Multiple users who retweeted two or more #RacistJoe-related tweets posted at rates that both the Oxford Internet Institute’s Computational Propaganda Project (more than 50 tweets per day) and the Atlantic Council’s Digital Forensic Research Lab (72 or more tweets per day) classify as indicative of inauthentic activity. Some tweeted as many as 1,000 times per day or 150 times per hour.

- Data visualization of the network revealed a formation, seen in past inauthentic campaigns, used to boost content across time and space.

- A group of 646 users who retweeted two or more #RacistJoe-related tweets had creation dates that skewed toward 2020 and 2021, something common to information operations regardless of who is behind them.

- The activity in the #RacistJoe dataset remained concentrated around a handful of tweets as the network grew larger, which conflicts with what we expect from a trending topic that takes off naturally. A landmark social bot study in Nature Communications (2018) stated: “… as a story reaches a broader audience organically, the contribution of any individual account or group of accounts should matter less.”

Backstory: The lie beneath the hashtag

Videos and articles spread alongside the hashtag #RacistJoe in response to an address from President Joe Biden. The full video clip—which includes a gaffe that initially sounded like Biden called Satchel Paige “a great Negro”—went viral on November 11, 2021. Biden has publicly struggled with a stutter, and that appears to have played a role in his reference to Paige, an iconic player who played in the Negro Leagues before ending his career in the majors.

Verbatim, Biden said:

“I’ve adopted the attitude of the great Negro at the time--pitcher in the Negro Leagues--who went on to become a great pitcher in the pros-in major league baseball after Jackie Robinson. His name was Satchel Paige.”

The raised hand and pause visible in the video, followed by a correction, make clear that Biden did not intend to refer to Paige as “a great Negro” but as a great “pitcher in the Negro Leagues.” Biden’s verbal stumbles are common among those with a stutter, a neurological disorder that can constitute a significant hardship. Those who struggle with a stutter have drawn hope from Biden’s success.

Americans exploiting Biden’s condition are following a mold set by adversaries in 2020. Foreign state-controlled media used stutter-related gaffes to spread disinformation about Biden's mental health in the months leading up to the 2020 election, a fact that was withheld without just cause from the public for months.

RT, an outlet that the U.S. National Intelligence Council classifies as Russian state-controlled media and that has faced criticism for interference in the recent German election, quickly published an article echoing the sentiments of a domestic provocateur:

- Fact-checkers rush to explain how Biden didn’t MEAN to call Satchel Paige ‘the great Negro’ from RT.

The President of the Negro Leagues Baseball Museum, Bob Kendrick, retweeted in response to #RacistJoe trending:

AP reported on one of the more glaring mischaracterizations of Biden’s verbal stumble in the article “Fox News edit of Biden comment removes racial context.” AP admonished Fox, saying:

When editing video, journalists have an obligation to keep statements in the context they were delivered or explain to viewers why a change was made, said Al Tompkins, a faculty member at Poynter Institute, a journalism think tank. In this case, the edit is not at all clear, he said.

The tweets and users engaging #RacistJoe-related content

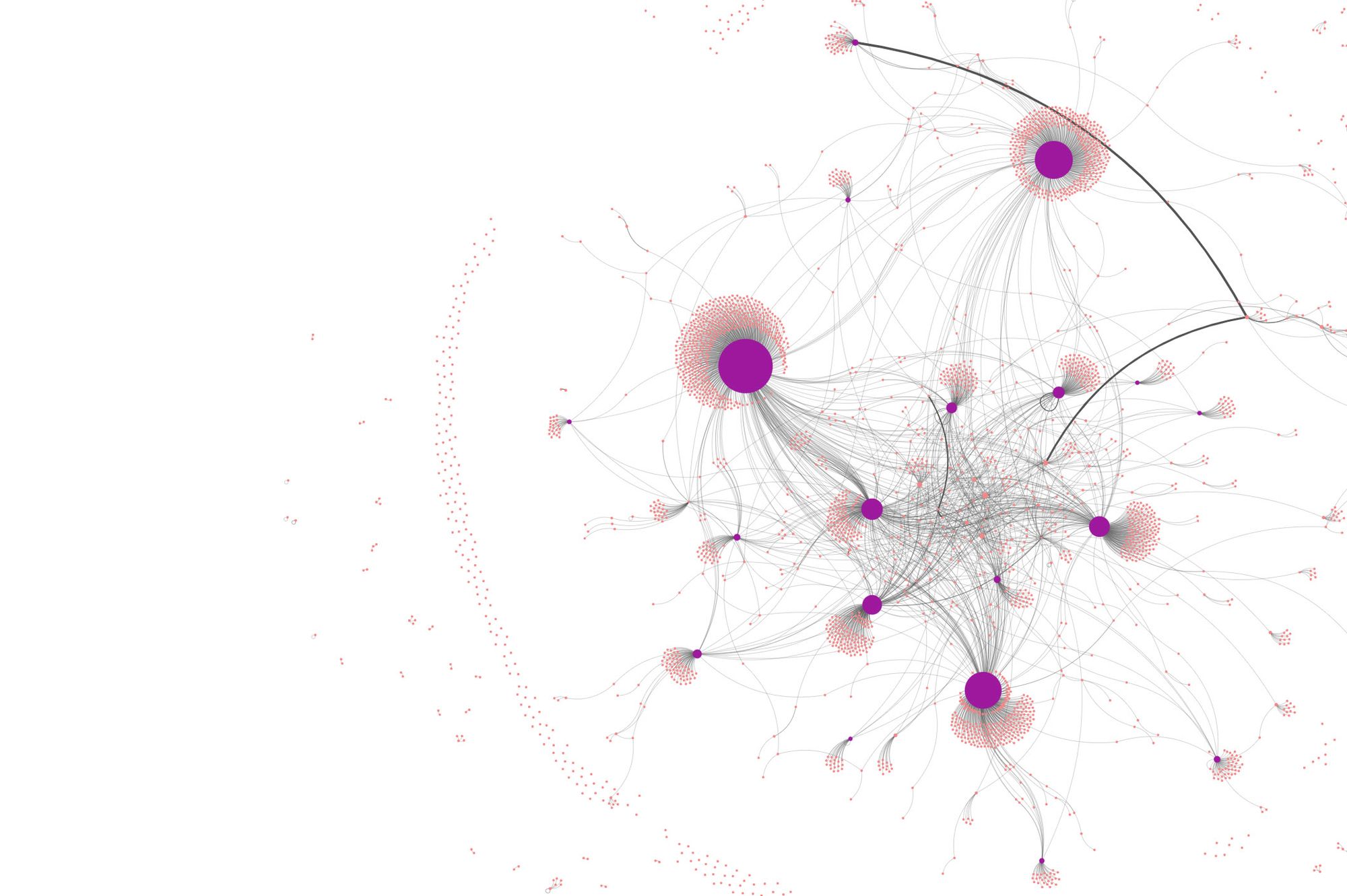

The first image, Figure 1, shows the network as it was on November 12, still growing. As the number of nodes (here, Twitter users) increases, we expect the importance of any single user to decline. Figure 1 shows the hashtag network with influencers colored magenta. Influencers are the set of nodes chosen so that the combined count of those nodes and the nodes connected to them is maximized. Put another way, these are well-connected users who can exert influence on others.

For more about identifying influencers, read Heuristic for Network Coverage Optimization Applied in Finding Organizational Change Agents.
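The influencer definition above amounts to a coverage problem: pick a small set of users so that the set plus its direct neighbors includes as many nodes as possible. The sketch below uses a simple greedy heuristic on a toy graph. It is an illustration under our own assumptions, not necessarily the procedure used for Figure 1 or in the cited paper; the function name `greedy_influencers` and the sample edges are hypothetical.

```python
from collections import defaultdict

def greedy_influencers(edges, k):
    """Pick up to k nodes that greedily maximize coverage.

    Coverage = the chosen nodes plus every node directly connected to them.
    """
    neighbors = defaultdict(set)
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)

    chosen, covered = [], set()
    for _ in range(k):
        best, best_gain = None, 0
        for node in sorted(neighbors):  # sorted so ties break deterministically
            if node in chosen:
                continue
            # How many not-yet-covered nodes would this pick add?
            gain = len(({node} | neighbors[node]) - covered)
            if gain > best_gain:
                best, best_gain = node, gain
        if best is None:  # no remaining node adds coverage
            break
        chosen.append(best)
        covered |= {best} | neighbors[best]
    return chosen, covered

# Hypothetical toy network: two hubs plus one stray pair.
edges = [("hub1", "a"), ("hub1", "b"), ("hub1", "c"),
         ("hub2", "d"), ("hub2", "e"), ("x", "y")]
influencers, covered = greedy_influencers(edges, k=2)
print(influencers, len(covered))
```

A greedy pick is only an approximation of the true maximum, but it is easy to inspect and usually surfaces the same hubs a reader would spot in the visualization.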

We identified the influencers at this early stage (November 12) because we expect in an organic network that the importance of any single node should decline as the network size increases. On November 12, there were a little over 2000 nodes. We can see key tweets are larger because they have more incoming links (indegree), which may reflect retweets, likes, or quotes.

Figure 2 shows the network on November 15. The network had reached 3,512 nodes, but activity remained concentrated around the key nodes from November 12. This goes against what we would expect as a network grows.

A 2018 study published in Nature Communications, “The spread of low-credibility content by social bots,” reported of inauthentically spread articles:

The more a story was tweeted, the more the tweets were concentrated in the hands of a few accounts, who act as “super-spreaders.”
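One way to put a number on this kind of concentration is to track what share of all incoming links goes to the few most-linked nodes at each snapshot. The following is a minimal sketch with invented data; `top_k_share` is a hypothetical helper, and the published analysis may have measured concentration differently.

```python
from collections import Counter

def top_k_share(edges, k=5):
    """Fraction of all incoming links that go to the k most-linked nodes."""
    indegree = Counter(target for _source, target in edges)
    total = sum(indegree.values())
    if total == 0:
        return 0.0
    top = sum(count for _node, count in indegree.most_common(k))
    return top / total

# Hypothetical snapshots of (retweeting_user, retweeted_node) pairs.
early = [("u1", "t1"), ("u2", "t1"), ("u3", "t2"), ("u4", "t3")]
later = early + [("u5", "t1"), ("u6", "t1"), ("u7", "t2"), ("u8", "t1")]

print(top_k_share(early, k=1))
print(top_k_share(later, k=1))
```

If a topic spreads organically, we would expect this share to hold steady or fall as the network grows. A share that rises between snapshots, as in the toy data, is the pattern the study associates with super-spreaders.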

Other examples make clear how similar the structures for boosting false and misleading claims are across time and space.

Hoaxlines found that a handful of tweets were the focus of concentrated activity. Here are some of the tweets:

We also found a series of tweets by searching #RacistJoe on Twitter. Although these tweets did not use the hashtag, many of the accounts posting the content also tweeted using “racist” and “Joe.” This illustrates how a single hashtag may not cover all related content.

In the case of #RacistJoe, many popular tweets conveying some version of the idea “Biden is racist” or “Biden called Satchel Paige a Negro,” did not contain the hashtag.

For this reason, we compared users retweeting #RacistJoe with users retweeting tweets that did not include the hashtag but still surfaced in Twitter searches for “RacistJoe,” likely because of replies containing the hashtag.

Overlap in the retweet network

Looking at individual accounts should play a role in determining whether inauthentic activity has taken place, but network analysis often provides the most objective and clear evidence. The advancement of social bots in terms of convincing appearance has meant that researchers must rely more on network-level detection.

Indegree (incoming links) and outdegree (outgoing links) centralities tell us who is doing the retweeting, liking, replying, and quoting and who is the beneficiary of those engagements.
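As a rough illustration, raw indegree and outdegree counts can be computed from a list of directed engagement edges. The sketch below assumes each edge is a `(engaging_user, engaged_user)` pair; the account names are invented, and the counts are left unnormalized for readability.

```python
from collections import Counter

def degree_counts(edges):
    """Return (indegree, outdegree) counters for directed (source, target) edges."""
    indegree, outdegree = Counter(), Counter()
    for source, target in edges:
        outdegree[source] += 1  # source did the retweeting, liking, or replying
        indegree[target] += 1   # target received that engagement
    return indegree, outdegree

# Hypothetical engagements: (engaging_user, engaged_user).
edges = [("amplifier1", "author_a"), ("amplifier1", "author_b"),
         ("amplifier2", "author_a"), ("amplifier3", "author_a")]
indegree, outdegree = degree_counts(edges)
print(indegree.most_common(1))   # the main beneficiary of engagement
print(outdegree.most_common(1))  # the most active engager
```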

Examining retweeters of some of the most popular tweets referencing or using the hashtag “RacistJoe,” we found that a group of 646 users had retweeted two or more of them.
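Finding such an overlap group is mostly bookkeeping: collect the distinct tracked tweets each user retweeted and keep the users with two or more. A minimal sketch, assuming the data is available as `(user, tweet_id)` records; the helper name and sample records are hypothetical.

```python
from collections import defaultdict

def multi_tweet_retweeters(retweets, min_tweets=2):
    """Return users who retweeted at least `min_tweets` distinct tracked tweets."""
    tweets_by_user = defaultdict(set)
    for user, tweet_id in retweets:
        tweets_by_user[user].add(tweet_id)  # a set ignores duplicate records
    return {user: tweets for user, tweets in tweets_by_user.items()
            if len(tweets) >= min_tweets}

# Hypothetical (user, tweet_id) retweet records.
retweets = [("u1", "A"), ("u1", "B"), ("u2", "A"), ("u2", "A"),
            ("u3", "A"), ("u3", "B"), ("u3", "C")]
overlap = multi_tweet_retweeters(retweets)
print(sorted(overlap))
```

Counting distinct tweets rather than raw records keeps a user who appears twice for the same tweet (`u2` here) out of the group.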

The accounts in this set were disproportionately likely to have been created in 2020 and 2021. Researchers have observed a skew toward recently created profiles in information operations across time and regardless of which groups were behind the efforts.
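A skew like this can be checked by tallying account creation years and computing the share that falls in the recent window. The sketch below assumes creation dates are available as ISO-format strings; the dates shown are invented and `recent_creation_share` is a hypothetical helper.

```python
from collections import Counter
from datetime import datetime

def recent_creation_share(created_at, recent_years=(2020, 2021)):
    """Return (per-year counts, share of accounts created in recent_years)."""
    years = Counter(datetime.fromisoformat(ts).year for ts in created_at)
    total = sum(years.values())
    recent = sum(years[y] for y in recent_years)
    return years, (recent / total if total else 0.0)

# Hypothetical ISO-format account creation dates.
created_at = ["2011-03-02", "2016-07-19", "2020-05-01",
              "2020-11-30", "2021-01-15", "2021-08-09"]
years, share = recent_creation_share(created_at)
print(dict(years), round(share, 2))
```

The share only becomes meaningful next to a baseline, for example the same figure computed for a comparison group of accounts discussing an unrelated topic.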

The skew toward recent creation dates has also been observed in data collected using Black Lives Matter (BLM) hashtags. BLM has been heavily targeted by domestic and foreign groups since its inception. The three accounts in Figure 8 retweeted all five #RacistJoe-related tweets.

The three accounts had creation dates within the last 1–2 years, a common trait of inauthentic accounts; expressed a narrow range of interests not found in a group of genuine accounts; and lacked identifying information, as is common with accounts created to manipulate social media platforms and influence people.

Critically, these traits exist together in multiple users found in this network.

Accounts retweeting multiple #RacistJoe-related tweets

One account that retweeted both ElectionWiz and DiscloseTV exceeded 1,000 tweets in a single day, suggesting that a human is unlikely to be responsible. This user also tweeted at a rate that broke 150 tweets per hour and almost exclusively retweeted, with a retweet rate over 99%.
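The three figures cited here (peak tweets per day, peak tweets per hour, and retweet rate) can all be derived from an account's timeline. A minimal sketch, assuming each tweet is a `(iso_timestamp, is_retweet)` pair; the timeline is invented, and hours are counted as clock-hour buckets rather than rolling 60-minute windows.

```python
from collections import Counter
from datetime import datetime

def activity_profile(tweets):
    """Summarize peak daily rate, peak hourly rate, and retweet share.

    `tweets` is a list of (iso_timestamp, is_retweet) tuples.
    """
    per_day, per_hour = Counter(), Counter()
    retweet_count = 0
    for ts, is_retweet in tweets:
        dt = datetime.fromisoformat(ts)
        per_day[dt.date()] += 1
        per_hour[(dt.date(), dt.hour)] += 1  # clock-hour bucket
        retweet_count += bool(is_retweet)
    total = len(tweets)
    return {
        "peak_per_day": max(per_day.values(), default=0),
        "peak_per_hour": max(per_hour.values(), default=0),
        "retweet_share": retweet_count / total if total else 0.0,
    }

# Hypothetical timeline for one account.
tweets = [("2021-11-06T10:00:05", True), ("2021-11-06T10:00:40", True),
          ("2021-11-06T10:15:00", True), ("2021-11-06T13:30:00", False),
          ("2021-11-07T09:00:00", True)]
profile = activity_profile(tweets)
print(profile)
```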

First Draft advises that more than 100 tweets per day may indicate an account is at least partially automated, but that is the upper limit of estimates from respected research organizations. DataJournalism.com wrote of account activity:

Researchers at the Oxford Internet Institute’s Computational Propaganda Project classify accounts that post more than 50 times a day as having “heavy automation.” The Atlantic Council’s Digital Forensics Research Lab considers “72 tweets per day (one every ten minutes for twelve hours at a stretch) as suspicious, and over 144 tweets per day as highly suspicious.”
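To make the thresholds concrete, the sketch below labels a daily tweet rate against the three benchmarks quoted in this section. It is a simplification under our own assumptions: each organization applies its threshold with its own methodology, and the function name and label wording are hypothetical.

```python
def automation_flags(tweets_per_day):
    """Label a daily tweet rate against the thresholds quoted in this section."""
    flags = []
    if tweets_per_day > 50:        # Oxford: more than 50 posts a day
        flags.append("Oxford: heavy automation")
    if tweets_per_day > 144:       # DFRLab: over 144 per day
        flags.append("DFRLab: highly suspicious")
    elif tweets_per_day >= 72:     # DFRLab: 72 per day
        flags.append("DFRLab: suspicious")
    if tweets_per_day > 100:       # First Draft: more than 100 per day
        flags.append("First Draft: possibly automated")
    return flags

for rate in (30, 60, 120, 1000):
    print(rate, automation_flags(rate))
```

A rate that trips every benchmark is still only a signal; it should be read together with the network-level evidence rather than as proof of automation.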

Another user who retweeted Lavern_Spicer, DiscloseTV, and ForAmerica had an exceptionally high retweet rate and tweeted over 600 times in a single day on November 6. The account also broke 125 tweets per hour recently, or a rate of more than two tweets per minute.

A third user who retweeted ElectionWiz, ForAmerica, and PapiTrumpo regularly broke 100 tweets per hour and had a retweet rate of over 90%. Hoaxlines found over 800 tweets in a single day from this account and the following recently used hashtags: #RacistJoe (7), #IStandWithSteve (4), #KyleRittenhouse (4), #KyleRittenhouseTrial (4), #LetsGoBrandon (4), #COVID19 (3), #FJB (3), #RINO (3), #RacistJoeBiden (3), #Rittenhouse (3).

The singular focus on hashtags promoted by right-wing influencers matches one of four universal traits (a sole tweeting purpose) reported in a large-scale 2021 study that examined bot traits over time using multiple datasets from different studies.

Hoaxlines also created a word cloud from the 646-user retweet group using profile bios to see which terms were in profiles and how frequently. The most common phrase was “not set,” the default setting. Although real users sometimes leave their bios blank, blank profiles occur much more frequently with accounts used for platform manipulation.
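The counts behind a word cloud like this one are simple term frequencies. The sketch below tallies blank or default bios separately from the words in filled-in bios. It assumes the default bio appears as the literal string "not set", as described above; the sample bios and the helper name are invented.

```python
from collections import Counter
import re

DEFAULT_BIO = "not set"  # assumed placeholder text for an unset bio

def bio_term_counts(bios):
    """Count default/blank bios and word frequencies in the remaining bios."""
    blank = 0
    words = Counter()
    for bio in bios:
        text = (bio or "").strip().lower()
        if text in ("", DEFAULT_BIO):
            blank += 1
            continue
        words.update(re.findall(r"[a-z0-9#']+", text))
    return blank, words

# Hypothetical profile bios.
bios = ["Not set", "", "Baseball fan. Dad.", "baseball and coffee", None, "not set"]
blank, words = bio_term_counts(bios)
print(blank, words.most_common(1))
```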

Below are profiles of highly active accounts (among the 100 most active in the #RacistJoe dataset) that we found engaging with content related to #RacistBiden.

Why does platform manipulation matter?

Inflating engagement with tweets through retweets or replies, for example, creates the illusion of popularity. Bad actors can leverage the illusion to direct public discussion, to influence opinion without disclosing financial backing, to disinform, or to discourage opponents through targeted harassment.

In one case where coordinated Twitter campaigns targeted civil activists, activists said that “they now self-censor on the platform.” An in-depth investigation by Mozilla included interviews with influencers who had accepted payments to tweet.

“They were told to promote tags—trending on Twitter was the primary target by which most of them were judged. The aim was to trick people into thinking that the opinions trending were popular—the equivalent to ‘paying crowds to show up at political rallies,’ the research says.”

Here the issue is not what opinion someone has but whether that opinion has been unethically influenced using information operations. Bots are permissible on Twitter, so the fact that an account is automated is not the problem. What is not permissible is platform manipulation, including the use of automated accounts in deceptive ways. Enforcing these policies is critical.

With anonymity and no accountability, unfettered platform manipulation poses a grave threat to national security, one that could be weaponized at a critical time, such as a natural disaster or a disease outbreak. The Office of the Director of National Intelligence declassified a report in August 2021 that conveys deep concern:

“Russia presents one of the most serious intelligence threats to the United States, using its intelligence services and influence tools to try to divide Western alliances, preserve its influence in the post-Soviet area, and increase its sway around the world, while undermining US global standing, sowing discord inside the United States, and influencing US voters and decision-making.”

And, “Cyber threats from nation-states and their surrogates will remain acute. Foreign states use cyber operations to steal information, influence populations, and damage industry, including physical and digital critical infrastructure. Although an increasing number of countries and non-state actors have these capabilities, we remain most concerned about Russia, China, Iran, and North Korea. Many skilled foreign cybercriminals targeting the United States maintain mutually beneficial relationships with these and other countries that offer them safe haven or benefit from their activity.”